Complaints to the IT team about slow performance from essential business applications are not new. However, the feedback is frequently vague and typically amounts to little more than “It’s very slow!”, which offers limited insight for those attempting to diagnose the issue.

In the expanding hybrid working landscape, many more people work outside traditional office settings. Organizations are deploying more cloud-based applications and adopting “everything-as-a-service” options from cloud platforms. In this world, poor network performance and latency issues are detrimental to good productivity.

System admins may shudder when they get a report that the network is slow. But on the rare occasions when performance and latency issues for remote workers get investigated, excessive packet loss on the remote network is frequently a root cause. Performance suffers because the communicating endpoints have to request, and then wait for, resends of network packets that never arrived.

In this article, we summarize the causes of packet loss and the impact it has on collaboration. We also describe innovative solutions that can drastically reduce the problem, give your systems admins respite from vague problem reports, and provide your hybrid workforce with an office-equivalent network experience. Finally, we link to more detailed resources that take a deeper dive into the problem, including an independent test showing that Cloudbrink’s solution provides up to a 30x improvement in network connectivity for remote users.

Defining Network Latency and Packet Loss

Network latency is the delay that happens during data transmission over a network. Basic latency is roughly proportional to the distance that the data needs to travel. Latency is particularly important in real-time applications such as video conferencing and VoIP, where any delay can significantly degrade the quality of the communication.

Packet loss refers to the failure of data packets to reach their destination, which is a common issue on both wired and wireless networks. Various factors, such as network congestion, hardware issues, interference on wireless networks, and VPN bandwidth limitations, can cause packet loss. This can worsen the effects of latency, especially in situations where data has to travel long distances.

How much packet loss is bad?

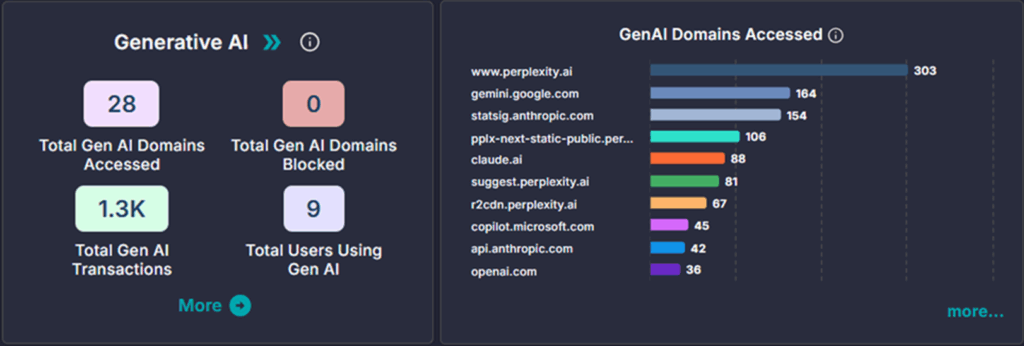

As illustrated in the simplified diagram above, modern hybrid workers, whether occasionally or always working remotely, are much more likely to encounter significant packet loss on the Wi-Fi networks they use at home, in hotels, coffee shops, or other locations.

The Impacts of Packet Loss and Increased Latency

Packet loss can cause significant problems that impact various aspects of communication. In real-time applications such as cloud video conferencing, the absence of some packets results in poor-quality visuals, unclear audio, and annoying delays. This can be frustrating for everyone involved and can hinder productive collaboration. Imagine trying to brainstorm with colleagues who appear pixelated and whose voices lag behind the conversation. It’s hardly conducive to a productive meeting.

Latency means that network packets take time to reach their destination endpoints, and the greater the distance between the end user and the application, the higher the latency. If not all transmitted packets reach their destination, the missing ones must be resent, and each resend pays that latency again. A slight lag from the VPN might be barely noticeable for users who are logically close to their applications in terms of network transmission times. For anyone on a remote or slow network connection, however, high latency and packet loss can turn simple tasks into tedious exercises in waiting.
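The cost of resends can be sketched with a deliberately simple model. It assumes each transmission attempt is lost independently with the same probability, and that every lost attempt costs one extra round trip before the resend arrives. Real protocols use timeouts and batching, so actual delays differ. The function names are illustrative only.

```python
def expected_transmissions(loss_rate: float) -> float:
    """Average number of sends needed per packet when each attempt
    is lost independently with probability loss_rate."""
    if not 0.0 <= loss_rate < 1.0:
        raise ValueError("loss_rate must be in [0, 1)")
    return 1.0 / (1.0 - loss_rate)


def expected_delivery_ms(one_way_ms: float, loss_rate: float) -> float:
    """Average time until a packet arrives, assuming (as a simplification)
    that each lost attempt adds one full round trip before the resend lands."""
    round_trip_ms = 2.0 * one_way_ms
    expected_retries = expected_transmissions(loss_rate) - 1.0
    return one_way_ms + expected_retries * round_trip_ms


if __name__ == "__main__":
    for one_way in (10.0, 75.0):
        for loss in (0.0, 0.01, 0.05):
            delay = expected_delivery_ms(one_way, loss)
            print(f"one-way {one_way:5.1f} ms, loss {loss:4.0%}: {delay:6.2f} ms on average")
```

Even in this idealized model, the penalty for each lost packet scales with the round-trip time, which is why the same loss rate hurts distant users more than nearby ones.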

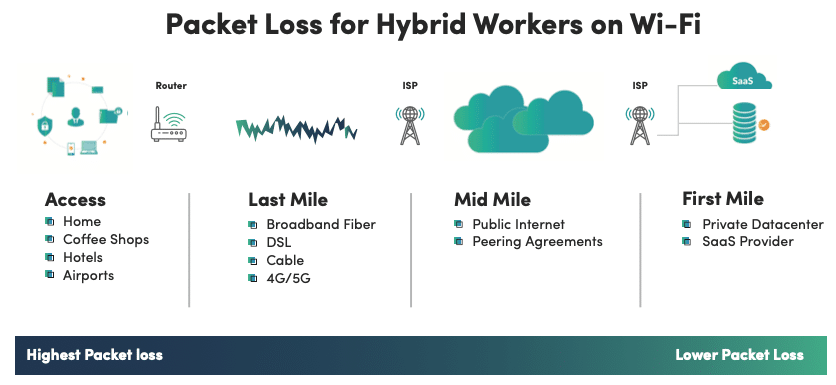

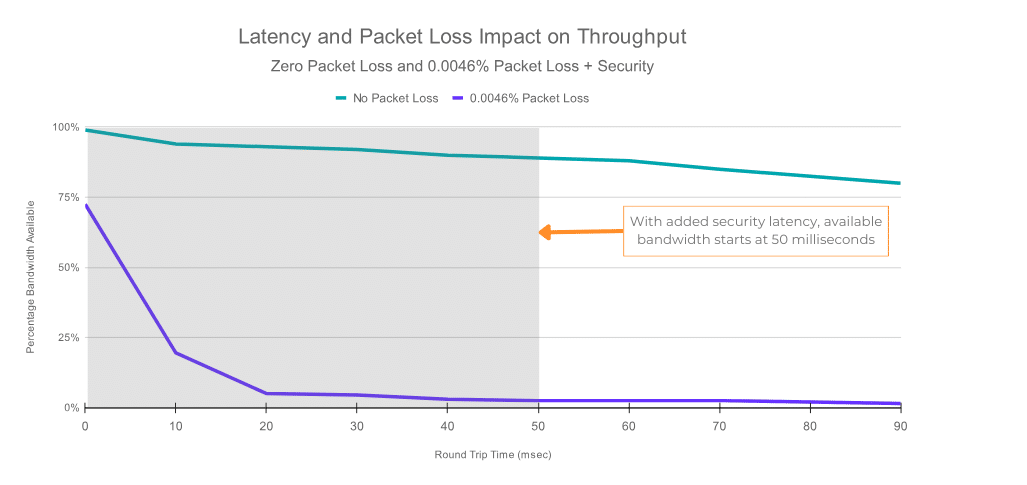

Images 2 and 3 above show the combined effect that network latency (round-trip time) and packet loss have on the percentage of available bandwidth that can be used.

As rapid as the drop-off in image 2 is, it doesn’t tell the whole story. Most organizations have security infrastructure deployed on their remote access solutions to check network traffic for malicious activity. Most cybersecurity solutions that perform this monitoring add a significant latency overhead to connections, even before the regular drop-off shown in image 2. The typical overhead is 50ms. When this is taken into account, we get the situation shown in image 3, where the best performance users can get from their network with just 0.0046% packet loss is 2% of the available bandwidth. This is why quality in a Teams or Zoom meeting can be terrible, or a file transfer can take forever, even though a speed test shows plenty of available bandwidth.

What this means in practice is that in a typical use case almost all of the theoretically available bandwidth is wiped out due to the added latency overhead from the security tools when there is a very small amount of packet loss.
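To see why a little loss combined with more latency is so damaging, one widely cited approximation for a single TCP flow is the Mathis model, which caps throughput at roughly (MSS / RTT) × (C / √loss) with C ≈ √1.5. The sketch below uses that model as an assumption for illustration. The article does not state which model or link speed produced the charts, so the output should not be expected to match the percentages quoted above. The 1 Gbps link and 1,460-byte segment size are also assumed values.

```python
import math

MATHIS_CONSTANT = math.sqrt(1.5)  # ~1.22, from the Mathis et al. approximation


def tcp_throughput_ceiling_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate upper bound on single-flow TCP throughput in bits per second."""
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    if not 0.0 < loss_rate <= 1.0:
        raise ValueError("loss_rate must be in (0, 1]")
    return (mss_bytes * 8 / rtt_s) * (MATHIS_CONSTANT / math.sqrt(loss_rate))


def usable_fraction(link_bps: float, mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Share of the link a single flow can use under the model, capped at 100%."""
    return min(1.0, tcp_throughput_ceiling_bps(mss_bytes, rtt_s, loss_rate) / link_bps)


if __name__ == "__main__":
    link_bps = 1e9          # assumed 1 Gbps link
    mss_bytes = 1460        # assumed typical Ethernet segment size
    loss_rate = 0.0046 / 100  # the 0.0046% loss figure from the text
    for rtt_ms in (10, 60, 110):  # e.g. a base RTT with and without added overhead
        share = usable_fraction(link_bps, mss_bytes, rtt_ms / 1000, loss_rate)
        print(f"RTT {rtt_ms:3d} ms -> about {share:.1%} of the link usable")
```

Under this model, throughput falls in direct proportion to round-trip time and with the square root of loss, so adding a fixed latency overhead shrinks usable bandwidth at every loss level.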

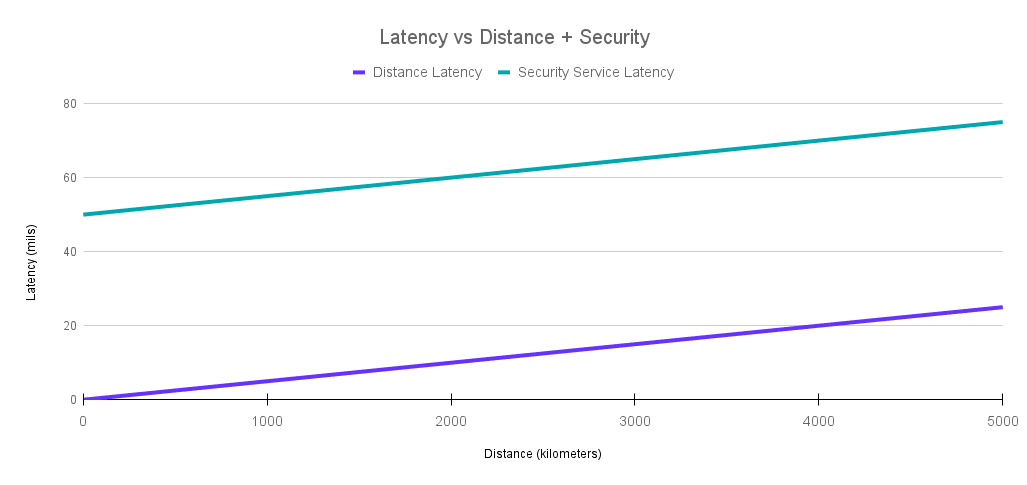

This 50ms security service overhead sits on top of the latency caused by network distance, which typically grows linearly as distance increases. Image 4 shows this in chart form. The 50ms overhead combined with distance latency comes to approximately 63ms at 2,500 km and 75ms at 5,000 km.
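The figures quoted above are consistent with a fixed 50ms overhead plus about 5ms per 1,000 km, which is roughly the one-way propagation delay of light in optical fiber (about 200,000 km/s). The sketch below reproduces that arithmetic. The fiber speed is an assumption used to match the quoted numbers, not a value stated in the article, and real routes add routing and queuing delay on top.

```python
FIBER_KM_PER_MS = 200.0        # assumption: ~200,000 km/s signal speed in fiber
SECURITY_OVERHEAD_MS = 50.0    # typical security overhead quoted in the text


def total_latency_ms(distance_km: float, overhead_ms: float = SECURITY_OVERHEAD_MS) -> float:
    """Fixed security overhead plus distance-proportional propagation delay."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return overhead_ms + distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    for km in (0, 2500, 5000):
        print(f"{km:5d} km -> {total_latency_ms(km):.1f} ms")
```

The overhead itself stays constant. It is the distance term that grows, so the overhead dominates total latency on short paths and still accounts for most of it at 5,000 km.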

How to Overcome the Impact of Latency and Packet Loss

Most of us have experienced the issue of latency degrading our multimedia-rich business applications. As we now know, we have also likely experienced the impacts of packet loss. Legacy network protocols and layered security were not designed for our modern cloud-centric and hybrid world.

Cloudbrink has identified and analyzed the common problems faced on networks and developed a new technology stack to address them. By employing some of the networking industry’s best experts, Cloudbrink has designed a system that effectively tackles the challenges of latency and packet loss.

The solution involves deploying FAST edges and a preemptive and accelerated packet recovery strategy. FAST edges are software instances placed in cloud data centers close to users, reducing the distance data needs to travel and thereby reducing end-to-end latency on the last section of its journey. The Cloudbrink software stack, along with these FAST edges, enables per-hop recovery, which minimizes the distance that impaired traffic has to traverse throughout the network.

Cloudbrink uses the Brink Protocol, which comes with built-in AI and ML capabilities. The Brink Protocol enables Cloudbrink to recover lost packets quickly and proactively while optimizing performance and improving user experience. It uses context to make per-hop decisions that enable rapid packet retrieval. By applying these mechanisms, Cloudbrink reduces end-to-end delay and enhances the overall quality of user experience.

NEW: Download the Free Packet Loss tool from Cloudbrink

Conclusion

Cloudbrink tackles the performance-destroying combination of network latency and packet loss. Independent industry analyst studies of Cloudbrink in action show that the claim to boost network throughput by 30x is actually an understatement in many instances. Real-world performance gains on connections affected by packet loss and latency are frequently in excess of 30x.

“Under-promise and over-deliver” was a maxim Steve Jobs often cited. Cloudbrink is happy to adopt this maxim when delivering network performance boosts for organizations of all sizes. Talk to Cloudbrink today, and follow the links below, to find out more and improve the network performance experience of your staff and customers.